So I

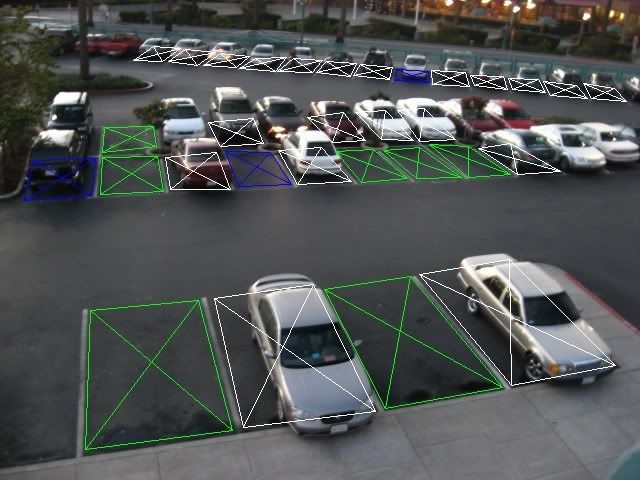

finally finished my color

histogramming program. Since training the support vector machine is completely pointless unless the data you are training it on is accurate, I had to make sure that my '

histogrammer' was 100% bug free. Here are some of the test images I used to debug the program:

Currently, the program works by reading in the log file which contains a list of coordinate-image lines to extract the pixels from a particular region of an image. For each line in the log file the program does the following:

- Compute the extraction region in the image.

- Convert the image from RGB to L*a*b*.

- Create 2 32-bin histograms, one for the 'a' channel and one for the 'b' channel (we discard the 'L' channel as it does not add much useful information in this case).

- Compute the histograms.

- Write out each histogram, bin by bin, to the resulting text file along with a 0 or a 1 to indicate if the region was a positive or negative training example.

With a log file of exactly 800 training regions, the program only took a couple of minutes to finish its calculations.

My next step will be to train a support vector machine on this training data. Since I'm most familiar with

SVMLight, I'm probably going to start there.

Currently, the program works by reading in the log file which contains a list of coordinate-image lines to extract the pixels from a particular region of an image. For each line in the log file the program does the following:

Currently, the program works by reading in the log file which contains a list of coordinate-image lines to extract the pixels from a particular region of an image. For each line in the log file the program does the following: